Cloud Native Digital Enterprise: A Reference Architecture

Introduction

Today’s competitive business landscape is pushing organizations to accelerate their digital transformation initiatives—often compressing timelines from years to months. APIs have emerged as the central products of the 21st century, enabling businesses to expose their capabilities in standardized, easily accessible ways.

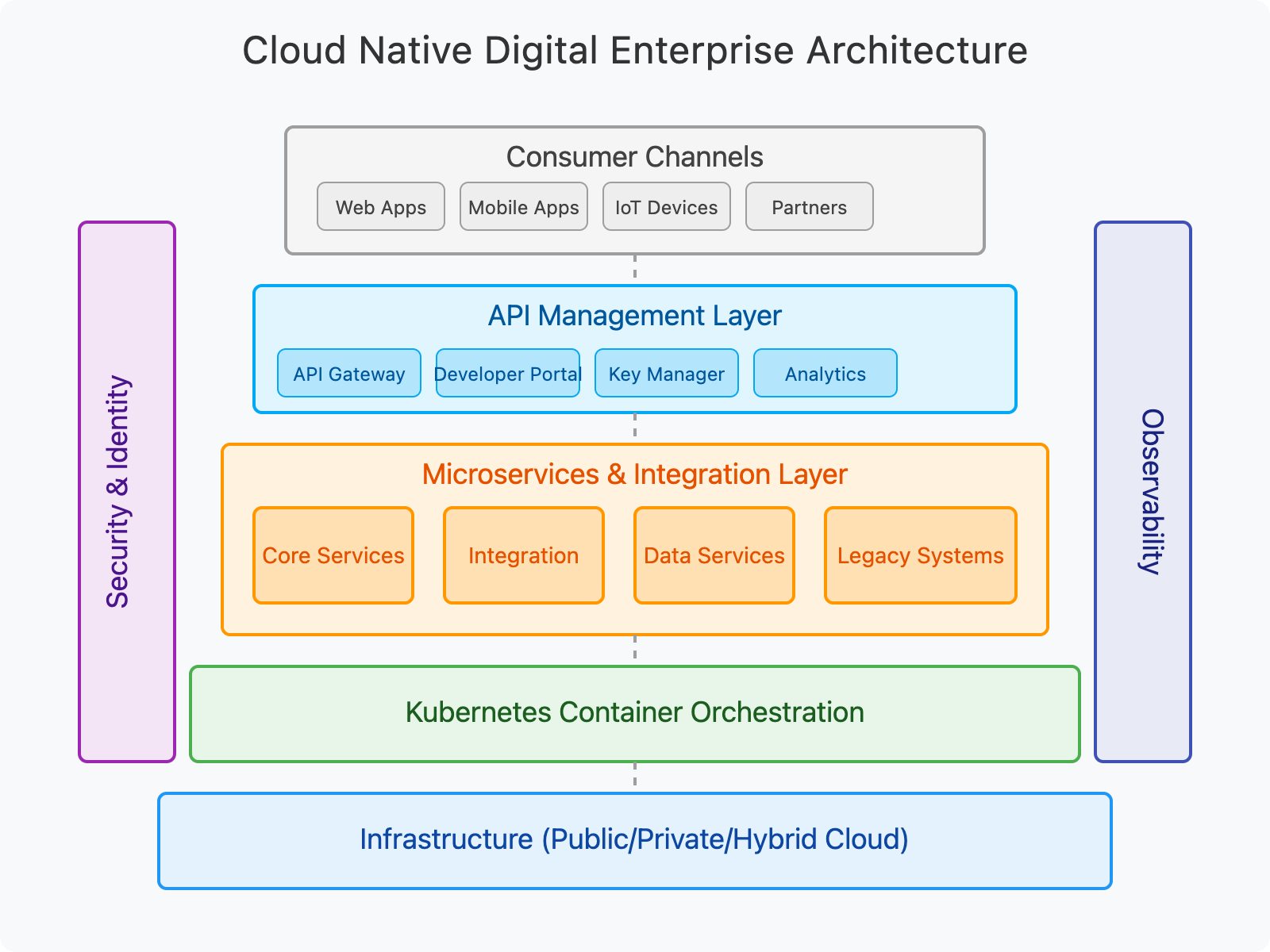

This guide presents a vendor-neutral reference implementation for building a cloud native digital enterprise architecture using two powerful open-source technology stacks: Kubernetes for container orchestration and a modern API-led integration platform. Together, these technologies enable organizations to create an agile, flexible, and scalable architecture through automation and API-driven services.

Cloud Native Digital Enterprise Architecture

Key Components of the Reference Implementation

1. Kubernetes: The Foundation

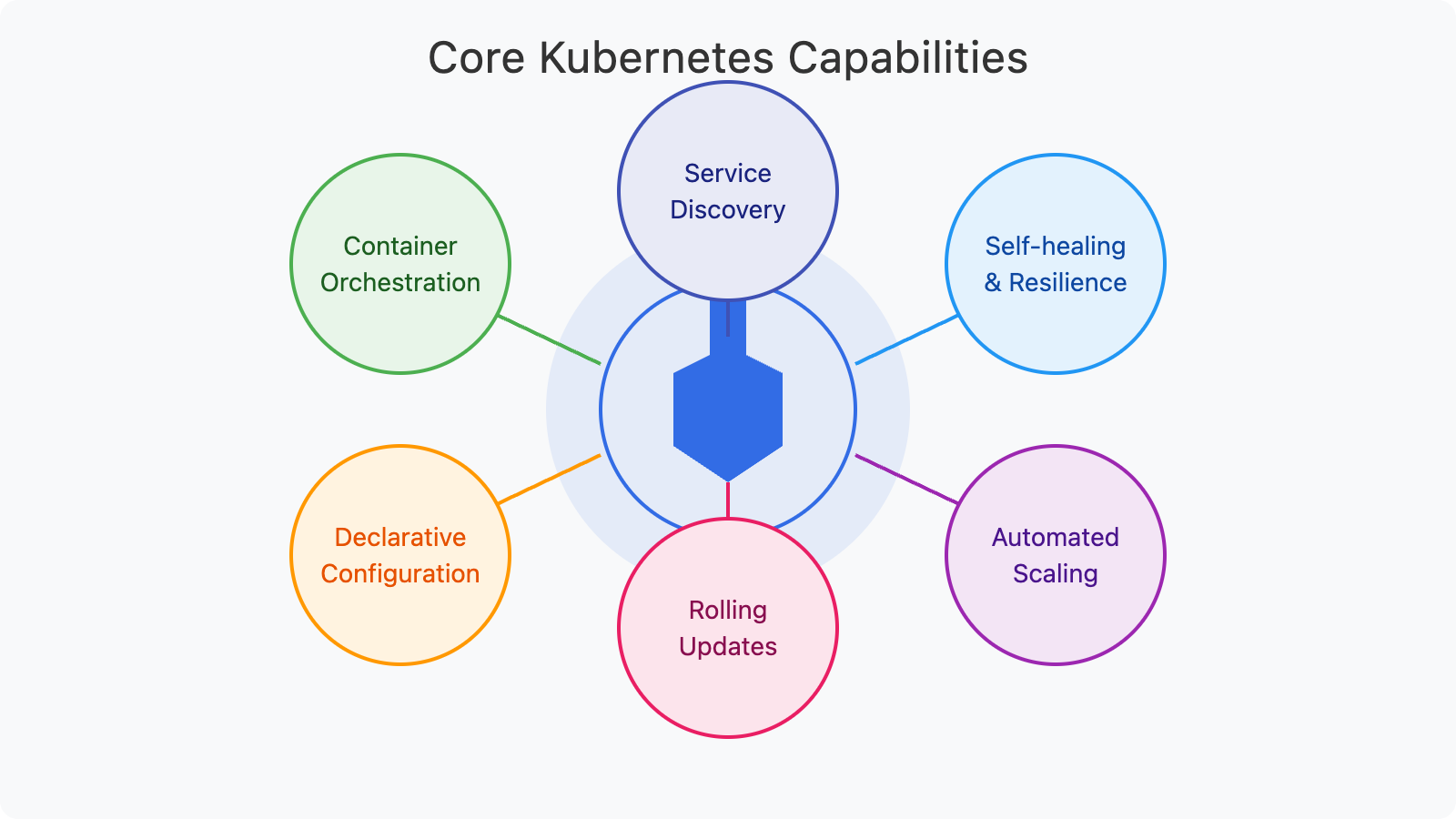

Kubernetes provides the cloud native foundation for our architecture, offering container orchestration, scalability, and operational automation. It serves as an excellent platform for building scalable, flexible solutions that align with business growth.

Core Kubernetes Capabilities:

Kubernetes offers:

- Container Orchestration: Automated deployment, scaling, and management of containerized applications

- Declarative Configuration: Define desired state and let Kubernetes handle implementation details

- Service Discovery: Automatic detection and routing between services

- Self-healing and Resilience: Automatic recovery from failures

- Automated Scaling: Dynamic resource allocation based on demand

- Rolling Updates: Zero-downtime deployments and rollbacks

Kubernetes can be installed on any private, public, or hybrid cloud infrastructure, offering flexibility in deployment options.

Kubernetes Architecture

The Kubernetes architecture consists of a control plane and worker nodes:

- Control plane components manage the cluster state and orchestration:

- ETCD: Distributed key-value store for cluster data

- Kube-scheduler: Places workloads on appropriate nodes

- Kube-controller manager: Manages node lifecycle and replication

- Kube-API server: Central communication hub for all components

- Worker node components run application workloads:

- Kubelet: Node agent that manages container workloads

- Kube-Proxy: Handles network communication between containers

Custom Resources and Controllers

Kubernetes allows extending its API through Custom Resources and Custom Controllers. These enable architects to build specialized platforms on top of Kubernetes for domain-specific abstractions and automation:

- Custom Resources are extensions to the Kubernetes API

- Custom Controllers keep the state of Kubernetes objects in sync with the desired state

Together, these enable organizations to create purpose-built automation for their specific workloads and requirements.

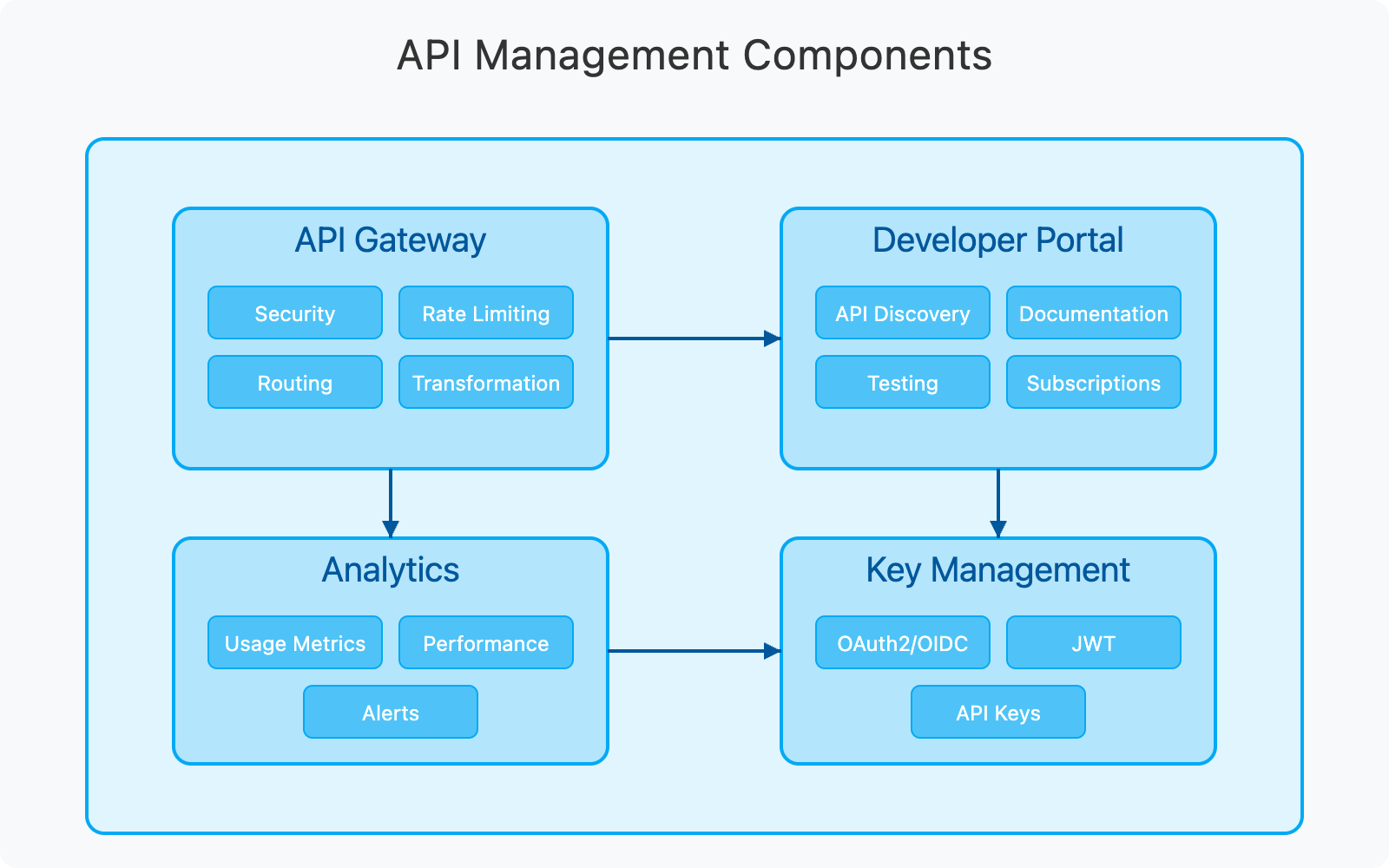

2. API Management: The Experience Layer

A robust API management platform is essential for exposing microservices as managed APIs both internally and externally. This layer handles API lifecycle management, security, and developer experiences.

The API management layer provides:

- API Gateway: Serves as the front door for all API traffic with security, routing, and policy enforcement

- Developer Portal: Enables developers to discover, test, and subscribe to APIs

- Key Management: Handles security tokens, authentication, and authorization

- Analytics: Provides insights into API usage, performance, and business value

This layer is crucial for creating a consistent and secure experience for API consumers while enabling proper governance and monetization of APIs.

Let me continue creating a more user-friendly, visually appealing guide to implementing a cloud native digital enterprise architecture, focusing on the Microservices and Integration section.

Full API Lifecycle Management

A comprehensive API management solution enables organizations to design, develop, publish, and monitor APIs. The platform should provide:

- API design and development tools with Open API Specification support

- Versioning and lifecycle management capabilities

- Documentation generation and publishing tools

- Monetization options for APIs

Developer Portal for API Ecosystem

The developer portal serves as a hub for discovering and onboarding APIs with minimal friction. It enables developers to:

- Find and test APIs before subscription

- Access comprehensive documentation

- Generate client code

- Manage subscriptions and API keys

Traffic Management and Quality of Service

An essential capability of an API management platform is the ability to regulate API traffic and provide different service levels to consumers:

- Rate limiting and throttling policies

- Traffic prioritization

- SLA enforcement

- Protection against traffic spikes

API Security

Comprehensive security is critical for protecting exposed business capabilities:

- Authentication via OAuth 2.0, JWT, Basic Auth, and API Keys

- Authorization and access control

- Threat protection

- Payload analysis and validation

Analytics and Business Intelligence

Analytics capabilities provide valuable insights into API usage and business impact:

- Usage statistics and patterns

- Performance metrics

- Anomaly detection

- Business intelligence for monetization

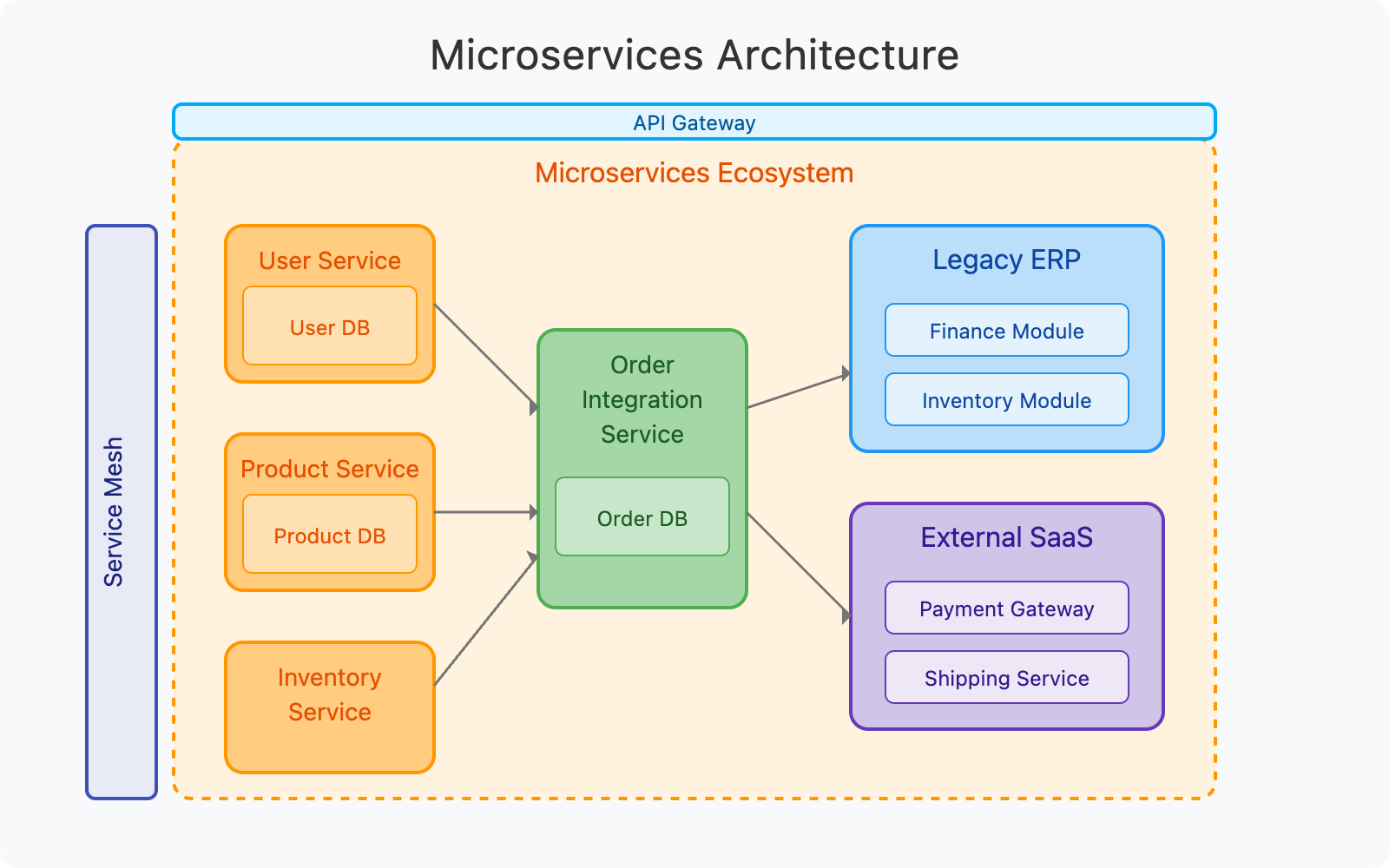

3. Microservices and Integration

Microservices architecture breaks down applications into small, independent services that can be developed, deployed, and scaled independently. This architectural approach is fundamental to building cloud native applications that are resilient, scalable, and maintainable.

The microservices ecosystem typically includes:

- Core Domain Services: Focused business capabilities like user management, product catalogs, and inventory control

- Integration Services: Combine multiple core services to deliver complex business processes

- Legacy System Connections: Adapters and bridges to connect with existing enterprise systems

- External SaaS Integration: Connect with third-party systems like payment processors and shipping services

- Service Mesh: Communication infrastructure that handles service-to-service interactions

- API Gateway: Entry point for client applications accessing microservices

Implementing microservices involves several key considerations:

Service Design Patterns

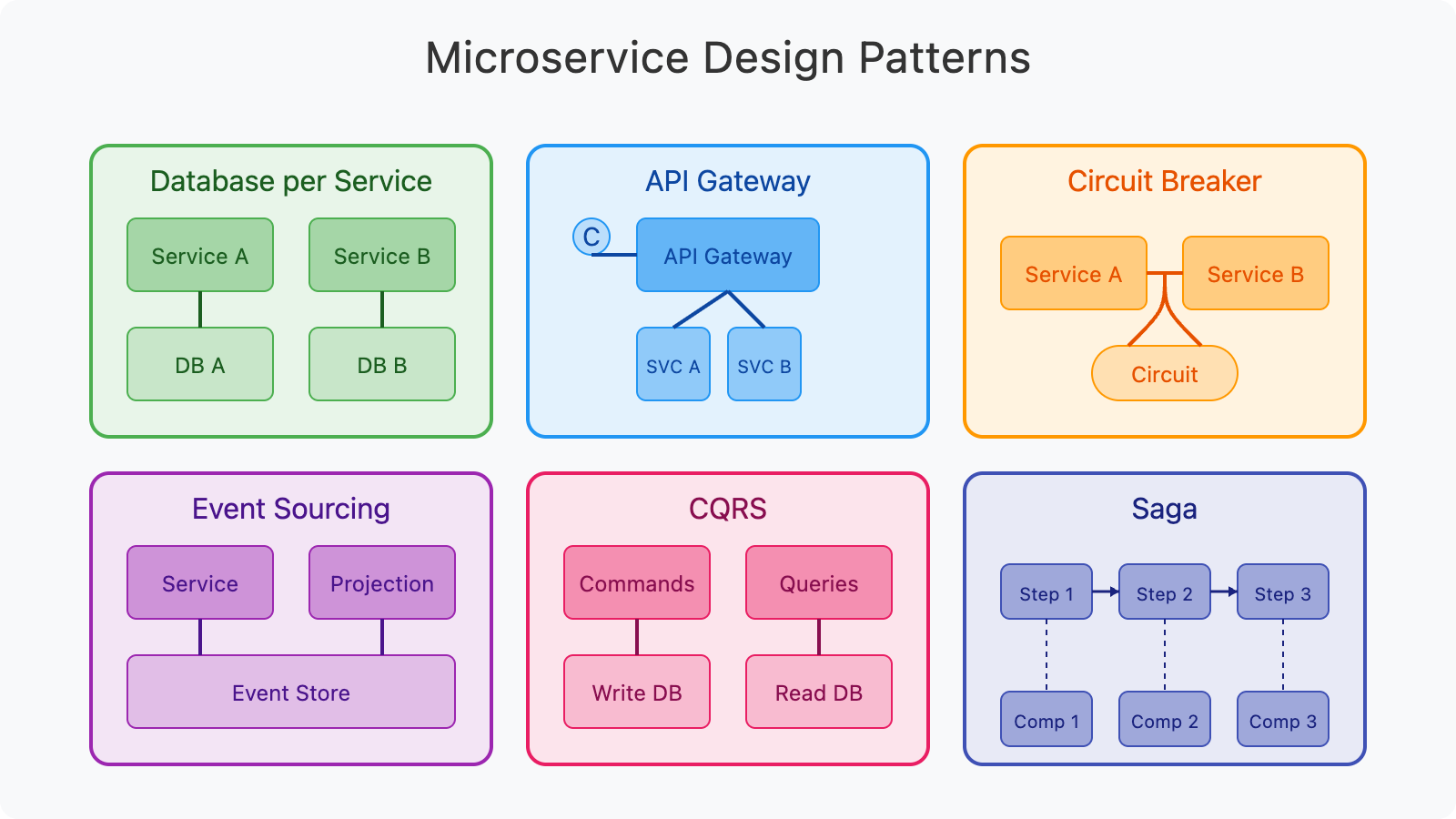

To implement effective microservices, several design patterns should be considered:

- Database per Service: Each microservice maintains its own database, ensuring loose coupling

- API Gateway: A single entry point that routes requests to appropriate services

- Circuit Breaker: Prevents cascading failures by gracefully handling service failures

- Event Sourcing: Captures all changes to application state as a sequence of events

- CQRS (Command Query Responsibility Segregation): Separates read and write operations

- Saga: Manages distributed transactions across multiple services

Gateway Deployment Patterns

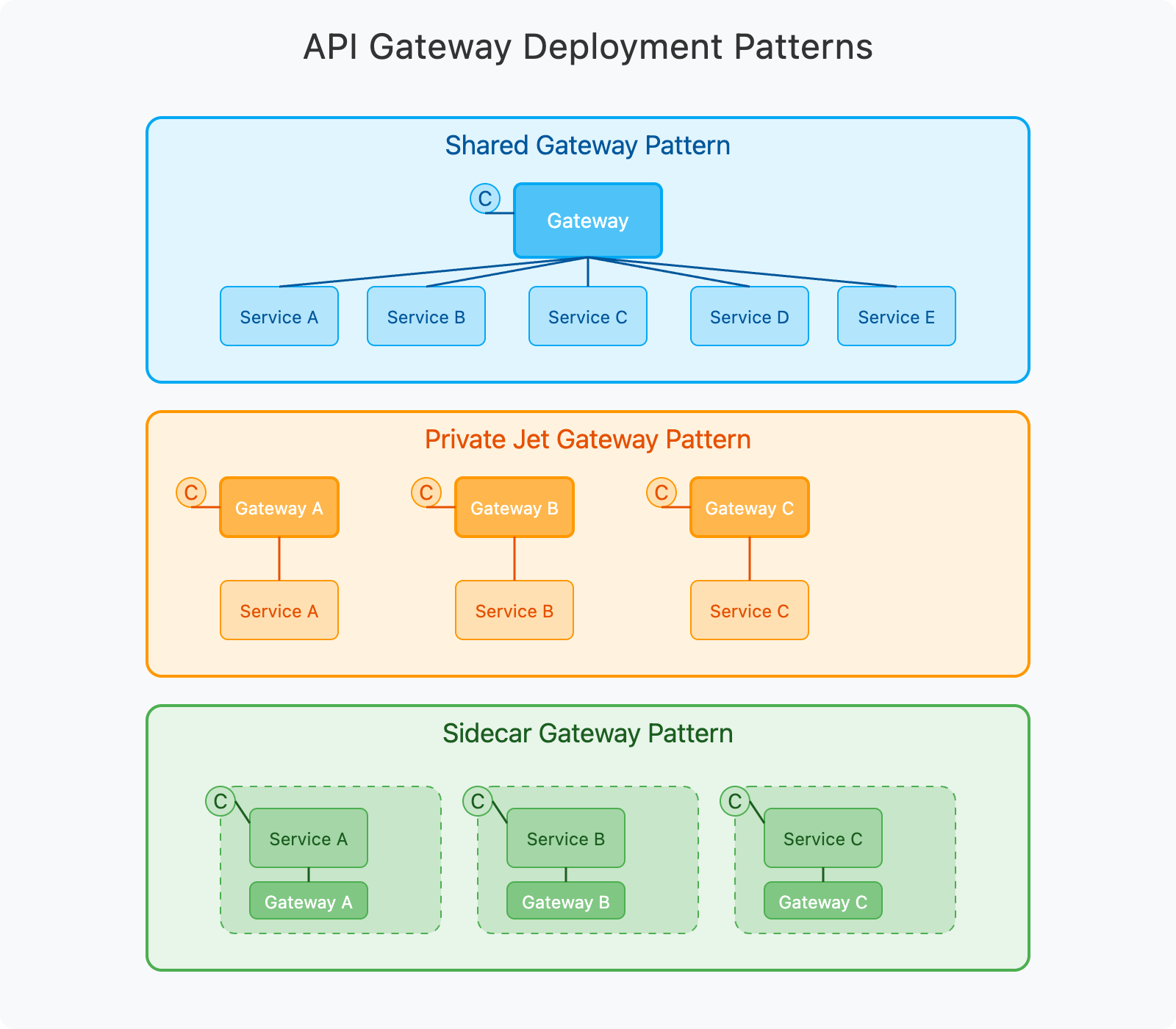

For API gateway deployment in a microservices architecture, three main patterns are available:

- Shared Gateway Pattern:

- A centralized gateway cluster handles all API requests

- All services are exposed through a single entry point

- Simplifies management but can create a scaling bottleneck

- Private Jet Gateway Pattern:

- Dedicated gateway per business domain or service group

- Allows independent scaling of gateways with their services

- Better isolation but requires more resources

- Sidecar Gateway Pattern:

- Gateway deployed as a sidecar alongside each service

- Maximum isolation and independent scaling

- Used in service mesh architectures

- Increased complexity in management

Integration Microservices

In traditional SOA, integration occurred through a centralized ESB (Enterprise Service Bus). In microservice architectures, this centralized approach conflicts with the principles of decentralization. Instead, integration functionality is distributed through specialized “integration microservices” that:

- Connect multiple core services to implement complex business processes

- Transform data between different formats and protocols

- Implement resiliency patterns like circuit breakers and retries

- Provide an abstraction layer over legacy systems and external services

These integration microservices should be lightweight, focused on specific business flows, and independently deployable like any other microservice.

Health Check and Auto-Healing

Kubernetes provides robust mechanisms for ensuring service health and automatic recovery:

- Startup Probes: Monitor initialization tasks when a container starts

- Readiness Probes: Determine when a service is ready to accept traffic

- Liveness Probes: Detect whether a service is functioning properly

Through these probes, Kubernetes can automatically restart failing services, remove unhealthy instances from load balancing, and replace them with new instances. This self-healing capability is essential for maintaining high availability in a microservices architecture.

Autoscaling

Autoscaling is critical for handling variable workloads efficiently. Kubernetes provides Horizontal Pod Autoscaler (HPA) that can automatically adjust the number of pod replicas based on:

- CPU utilization

- Memory usage

- Custom metrics from applications

- External metrics from monitoring systems

This capability ensures that services can scale up during peak demand periods and scale down during quieter periods, optimizing resource usage and cost.

4. Observability: Monitoring the Ecosystem

A critical aspect of any cloud native architecture is comprehensive observability. In distributed systems, traditional monitoring approaches are insufficient.

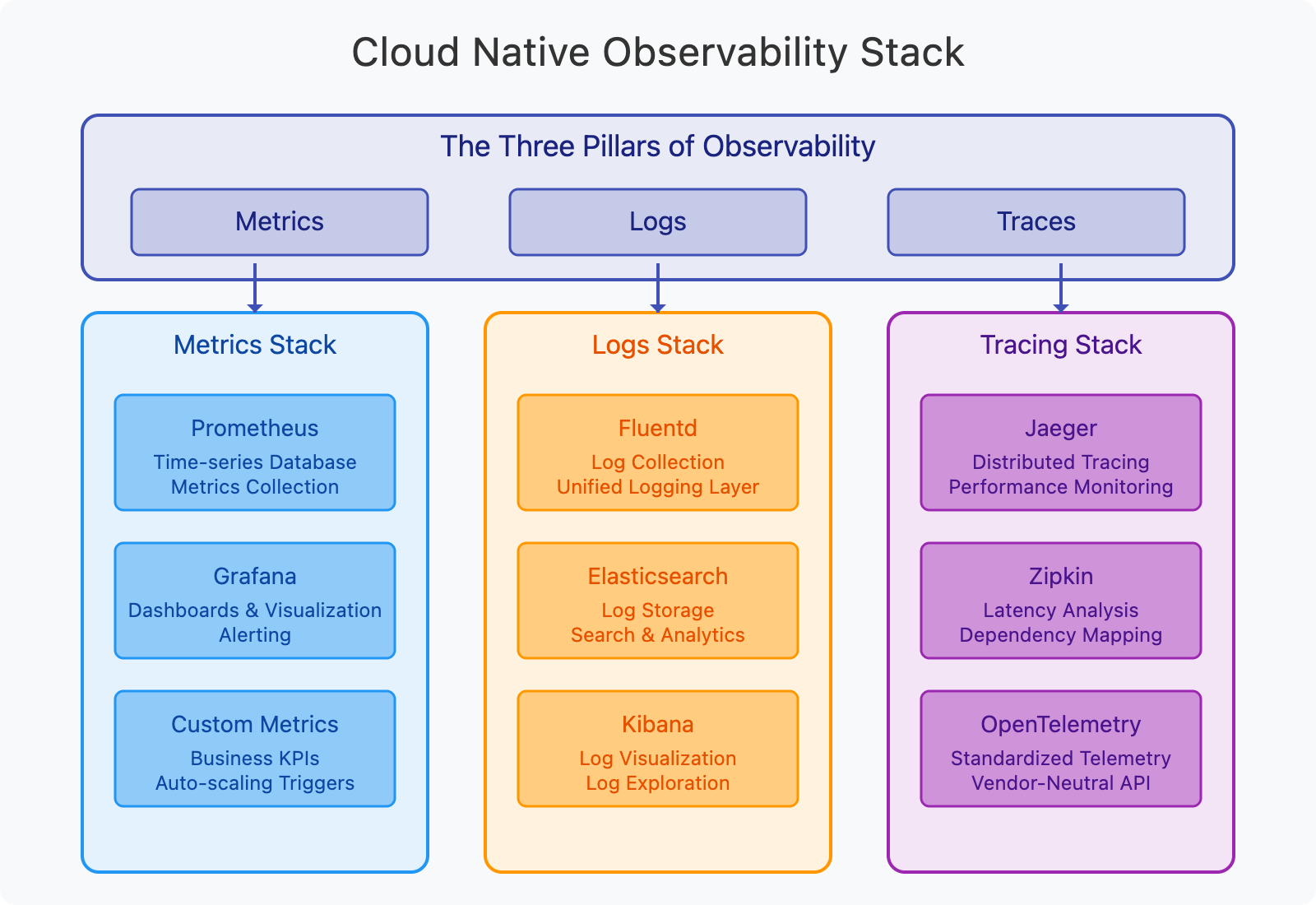

Cloud native observability is built on three pillars:

- Metrics: Numerical data collected at regular intervals

- Prometheus: Time-series database for metrics collection

- Grafana: Visualization and dashboards

- Custom metrics: Business KPIs and auto-scaling triggers

- Logs: Detailed records of events and activities

- Fluentd: Unified logging layer

- Elasticsearch: Log storage and search

- Kibana: Log visualization and exploration

- Traces: Tracking requests as they flow through multiple services

- Jaeger: Distributed tracing system

- Zipkin: Latency analysis

- OpenTelemetry: Standardized instrumentation

A robust observability implementation should:

- Provide clear visibility into system health

- Enable rapid problem diagnosis

- Support capacity planning and performance optimization

- Offer business insights through analytics

5. Continuous Integration and Deployment (CI/CD)

To fully realize the benefits of a cloud native architecture, implementing a robust CI/CD pipeline is crucial for automating the delivery of microservices.

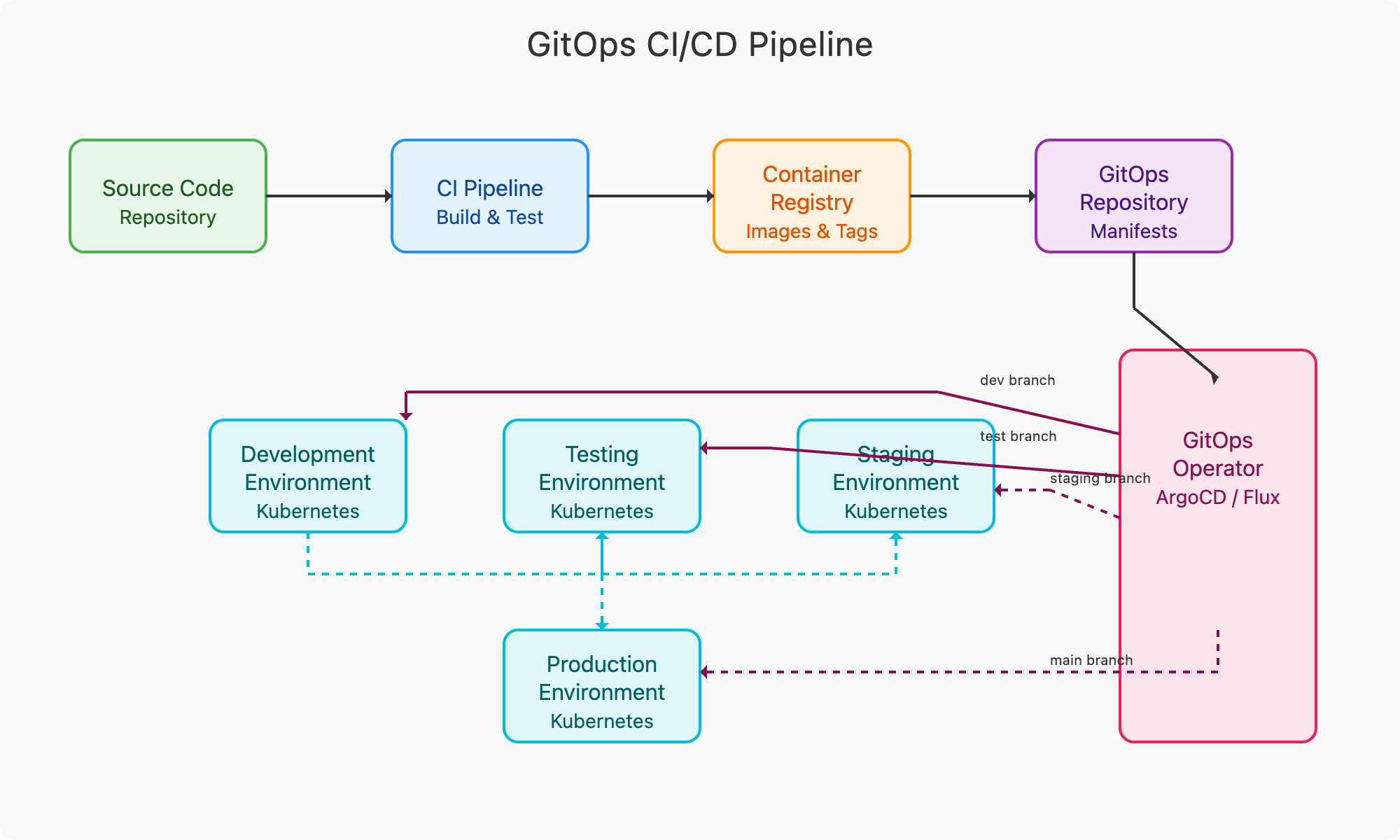

The GitOps approach to CI/CD combines Git as the single source of truth with automated deployment tools to provide a developer-centric experience for deploying microservices to Kubernetes environments. The pipeline typically includes:

Components of the CI/CD Pipeline

- Source Code Repository:

- Stores application code and infrastructure definitions

- Developers commit changes to trigger the pipeline

- Supports branching strategies for feature development

- CI Pipeline:

- Automatically builds and tests code on commit

- Runs unit tests, integration tests, and security scans

- Creates container images for deployment

- Container Registry:

- Stores versioned container images

- Acts as an immutable repository of deployable artifacts

- Provides scanning and security validation of images

- GitOps Repository:

- Contains Kubernetes manifests and configuration

- Defines the desired state of all environments

- Uses separate branches for different environments

- GitOps Operator:

- Watches for changes in the GitOps repository

- Automatically applies changes to target environments

- Tools like ArgoCD or Flux implement this pattern

Environment Promotion Flow

The typical flow through environments follows this pattern:

- Code changes are committed to the source repository

- CI pipeline builds, tests, and creates container images

- CI updates the GitOps repository with new image tags

- GitOps operator detects changes and deploys to development

- After validation, changes are promoted to testing (via merge or manual trigger)

- After testing, changes are promoted to staging and eventually production

This approach provides several benefits:

- Consistency: The same deployment process is used across all environments

- Auditability: All changes are tracked in Git with commit history

- Reliability: Automated processes reduce human error

- Reversibility: Easy rollback to previous versions if issues are detected

Deployment Strategies

To achieve zero-downtime deployments and manage risk effectively, several deployment strategies can be implemented:

Ramped (Rolling) Deployment

The ramped or rolling update strategy gradually replaces instances of the old version with the new version. Kubernetes handles this automatically by starting new pods while terminating old ones, maintaining a minimum number of available pods during the process.

Blue/Green Deployment

In blue/green deployments, two identical environments (blue and green) are maintained. The new version is deployed to the inactive environment, and once verified, traffic is switched from the active environment to the newly updated one. This provides an immediate rollback capability if issues are detected.

Canary Deployment

Canary deployments roll out changes to a small subset of users before making them available to everyone. This allows testing the new version with real users and real traffic, while limiting the impact of potential issues. Once the canary is verified, the deployment can be gradually expanded to all users.

A/B Testing

A/B testing routes a subset of users to a new version under specific conditions to test the effectiveness of changes. This approach is particularly useful for measuring the impact of user experience changes or new features.

Conclusion

Implementing a cloud native digital enterprise architecture requires thoughtful integration of multiple technologies and patterns. By combining Kubernetes as the foundation with robust API management, microservices design patterns, comprehensive observability, and automated deployment pipelines, organizations can create a platform that enables agility, scalability, and innovation.

Key takeaways for a successful implementation:

- Adopt an API-first approach to expose business capabilities as managed products

- Design microservices with clear boundaries using domain-driven design principles

- Choose appropriate gateway patterns for your specific requirements

- Implement comprehensive observability across metrics, logs, and traces

- Automate deployment through GitOps to ensure consistency and reliability

This reference architecture provides a blueprint that can be adapted to different industry needs and scaled appropriately for organizations of all sizes. As the cloud native ecosystem continues to evolve, this architecture can incorporate new tools and patterns while maintaining the core principles that enable digital transformation.